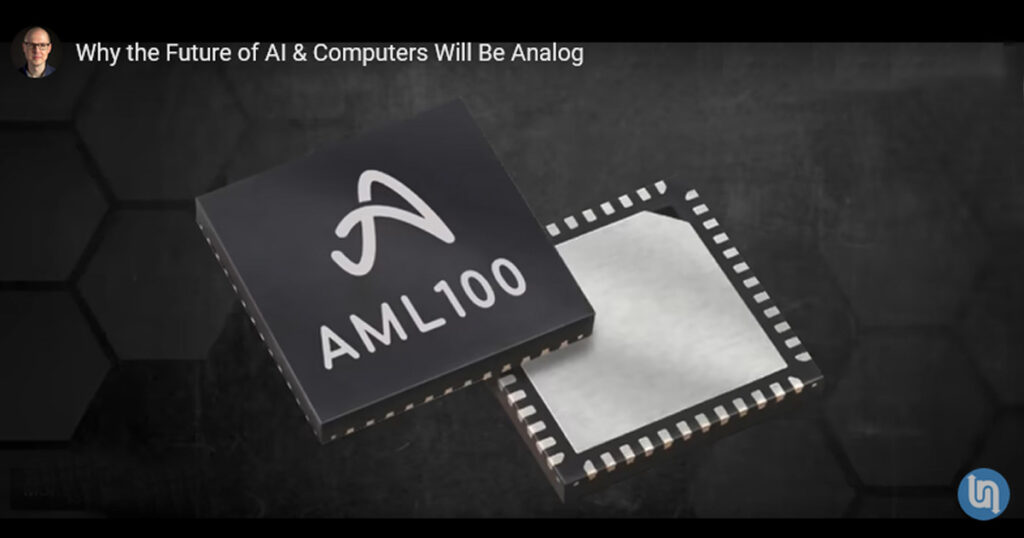

Aspinity’s proprietary semiconductor technology pairs the accuracy of machine learning with the ultra-low power of analog processing to deliver unparalleled performance and efficiency for AI-driven event detection, classification, notification, and prevention.

analog inferencing: < 0 μA

always-on AI system power: < 0 μW

software programmable: 0 %

Automotive

Superior accuracy with near-zero power AI enables real-time, discerning insight into contacts, collisions, key scratches, glass breaks, activity, and other security threats for best-in-class parked vehicle monitoring.

Smart Home

Ultra-low power inferencing enables highly accurate AI monitoring of glass break, T3/T4, leaks, etc. for long-lasting, battery-operated sensors.

IoT

A wide range of IoT devices can accurately monitor and measure voice/keyword, vibration, or other movements — all with ultra-low power.

What’s New

In this episode of Undecided, Matt Ferrell discusses how and why analog is so important for the future of energy efficient AI....

Advisory board members from Kymeta and BCG to provide technical expertise and strategic guidance for Aspinity’s next phase of growth....

Aspinity announces the availability of a new suite of automotive security algorithms and launches a new dashcam evaluation kit....

The Aspinity team is heading to Nuremberg, Germany from April 9-11 for Embedded World 2024. Click below to schedule a meeting with us today and see our latest partner demos....

Aspinity Welcomes Semiconductor Industry Veteran Richard Hegberg as CEO...

We are excited to be featured on the latest episode of the AI Hardware Show with @Ian Cutress and @Sally Ward-Foxton. Tune in to hear more about the AML100. ...